Authors:

(1) Dustin Podell, Stability AI, Applied Research;

(2) Zion English, Stability AI, Applied Research;

(3) Kyle Lacey, Stability AI, Applied Research;

(4) Andreas Blattmann, Stability AI, Applied Research;

(5) Tim Dockhorn, Stability AI, Applied Research;

(6) Jonas Müller, Stability AI, Applied Research;

(7) Joe Penna, Stability AI, Applied Research;

(8) Robin Rombach, Stability AI, Applied Research.

Table of Links

2.4 Improved Autoencoder and 2.5 Putting Everything Together

Appendix

D Comparison to the State of the Art

E Comparison to Midjourney v5.1

F On FID Assessment of Generative Text-Image Foundation Models

G Additional Comparison between Single- and Two-Stage SDXL pipeline

B Limitations

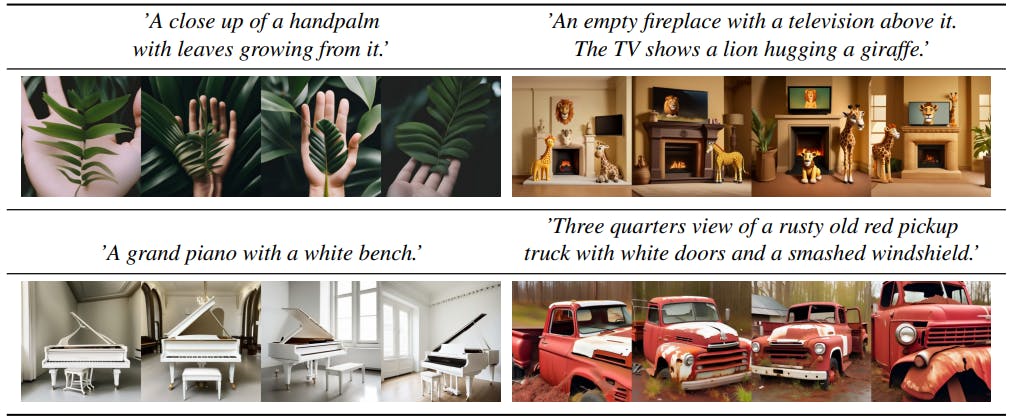

![Figure 7: Failure cases of SDXL despite large improvements compared to previous versions of Stable Diffusion, the model sometimes still struggles with very complex prompts involving detailed spatial arrangements and detailed descriptions (e.g. top left example). Moreover, hands are not yet always correctly generated (e.g. top left) and the model sometimes suffers from two concepts bleeding into one another (e.g. bottom right example). All examples are random samples generated with 50 steps of the DDIM sampler [46] and cfg-scale 8.0 [13].](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-wr8300v.png)

While our model has demonstrated impressive capabilities in generating realistic images and synthesizing complex scenes, it is important to acknowledge its inherent limitations. Understanding these limitations is crucial for further improvements and ensuring responsible use of the technology.

Firstly, the model may encounter challenges when synthesizing intricate structures, such as human hands (see Fig. 7, top left). Although it has been trained on a diverse range of data, the complexity of human anatomy poses a difficulty in achieving accurate representations consistently. This limitation suggests the need for further scaling and training techniques specifically targeting the synthesis of fine-grained details. A reason for this occurring might be that hands and similar objects appear with very high variance in photographs and it is hard for the model to extract the knowledge of the real 3D shape and physical limitations in that case.

Secondly, while the model achieves a remarkable level of realism in its generated images, it is important to note that it does not attain perfect photorealism. Certain nuances, such as subtle lighting effects or minute texture variations, may still be absent or less faithfully represented in the generated images. This limitation implies that caution should be exercised when relying solely on model-generated visuals for applications that require a high degree of visual fidelity.

Furthermore, the model’s training process heavily relies on large-scale datasets, which can inadvertently introduce social and racial biases. As a result, the model may inadvertently exacerbate these biases when generating images or inferring visual attributes.

In certain cases where samples contain multiple objects or subjects, the model may exhibit a phenomenon known as “concept bleeding”. This issue manifests as the unintended merging or overlap of distinct visual elements. For instance, in Fig. 14, an orange sunglass is observed, which indicates an instance of concept bleeding from the orange sweater. Another case of this can be seen in Fig. 8, the penguin is supposed to have a “blue hat” and “red gloves”, but is instead generated with blue gloves and a red hat. Recognizing and addressing such occurrences is essential for refining the model’s ability to accurately separate and represent individual objects within complex scenes. The root cause of this may lie in the used pretrained text-encoders: firstly, they are trained to compress all information into a single token, so they may fail at binding only the right attributes and objects, Feng et al. [8] mitigate this issue by explicitly encoding word relationships into the encoding. Secondly, the contrastive loss may also contribute to this, since negative examples with a different binding are needed within the same batch [35].

Additionally, while our model represents a significant advancement over previous iterations of SD, it still encounters difficulties when rendering long, legible text. Occasionally, the generated text may contain random characters or exhibit inconsistencies, as illustrated in Fig. 8. Overcoming this limitation requires further investigation and development of techniques that enhance the model’s text generation capabilities, particularly for extended textual content — see for example the work of Liu et al. [27], who propose to enhance text rendering capabilities via character-level text tokenizers. Alternatively, scaling the model does further improve text synthesis [53, 40].

In conclusion, our model exhibits notable strengths in image synthesis, but it is not exempt from certain limitations. The challenges associated with synthesizing intricate structures, achieving perfect photorealism, further addressing biases, mitigating concept bleeding, and improving text rendering highlight avenues for future research and optimization.

This paper is available on arxiv under CC BY 4.0 DEED license.